Flick

Flick is a gesture-controlled interface for music streaming on a mobile device.

Project Overview

CHALLENGE

Redesign an existing music streaming app interface to accommodate a language of hand gestures to allow for touch-free control and legibility when used from a distance. Produce a video demonstration of the app's capabilities using green screen video editing techniques.

TOOLS

Figma, Adobe Premiere Pro, Adobe Audition, Adobe Dimension

SKILLS

Wireframing, UI design, sketching, prototyping, video editing

COURSE

Objects + Space, Graham Plumb

TIMELINE

4 weeks, Fall 2020

A video demonstration of Flick.

Problem Statement

THE PROBLEM

My immediate concerns upon starting this project were around the usability and accessibility of a gesture-controlled mobile interface. A phone screen is small to begin with, and it is even harder to read when standing several feet away from it. I found that existing music streaming apps used a lot of small text, which isn't an issue up close, but becomes problematic in the context of this project.

As I tried to envision use cases for this type of product, one that occurred to me was an outdoor gathering where you don't want to carry your phone around with you. However, as a glasses wearer myself, when I put myself in this position, I realized that I had a hard time making out the small characters on my phone's screen when it was some distance away from me.

DIRECTION

This informed my direction for the design. With gesture control being so unfamiliar compared to the ubiquitous touch screen, my solution for this project would have to balance two things:

1. The need to onboard the user, not only teaching them how to use this interface but also helping them memorize a set of hand gesture controls, and

2. The need for users to be able to clearly read and interpret screen-based text and icons from a distance.

HMW . . . ?

With this in mind, I generated a "How Might We" statement to guide me in my next steps on the project. I focused on my two main concerns relating to usability.

How might we design a gesture-controlled mobile interface that is simple to learn and legible from a distance?

Research

WHAT IS A GESTURE?

My understanding of gesture prior to starting this project was that it was simply a nonverbal movement of one's body to express a piece of information. However, while conducting research for this project, I learned that gesture and its meaning can be highly subjective and might vary based on one's biases, culture, and past knowledge and experiences. To better understand the range of information that can be conveyed through gesture, I conducted two observation exercises.

1. In the first, I recorded myself as I went about my day. I then watched the recording and captured moments in which I made a gesture. These gestures were then sorted into two categories: intentional and unintentional. Understanding which gestures were made absentmindedly or unconsciously gave me insight into which gestures to exclude from my app's controls.

2. My gestures and their meanings were all quite clear to me, but that was to be expected. The next part of this exercise was to observe someone else's gestures to see what movements they naturally use to express certain feelings and pieces of information. For this activity, I took screen captures from an interview on YouTube. I learned that it can be challenging to guess the intended meaning behind a gesture and that what might seem obvious to one person is incomprehensible to another.

Images and descriptions of gestures I observed during my research

DRAWING GESTURES

This left me with more insight into what it meant to create meaning with gestures, but there is another aspect to creating a language of gesture controls: depicting them visually. I chose a gesture – prayer hands – and experimented with different ways to represent it.

Experiments in different ways of representing a gesture.

Process

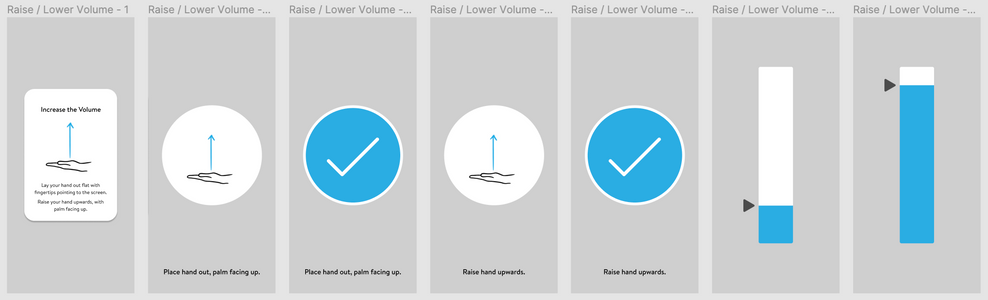

INSTRUCTIONAL DRAWINGS

Of course, there is a list of key functions that a music streaming app needs to have. For the sake of this project, I determined that this app must allow users to: scroll up and down, skip forward and backward, pause and unpause, increase and decrease volume, select albums and songs, and shuffle songs.

ROUND ONE

I quickly sketched 2 options for gesture controls for 4 of the functions I had identified. I then tested these with 2 user testers who are both users of music streaming apps. I was looking to see:

1. Can they physically make this gesture? Is it comfortable and natural?

2. Can they correctly guess what function this gesture would have?

3. Does the meaning of this gesture make sense? Would it be hard to learn?

After learning from user testers, I selected one gesture for each of the functions to move forward with and iterate on.

Sketches for gestures used in user testing session.

ROUND TWO

Having feedback on my rough sketches, I moved on to using Procreate on an iPad Pro to create higher quality versions of my instructional drawings. After creating these, I held another user testing session over Zoom. The tester I was working with was a student who is a serious user of a music streaming app.

I shared my screen and presented one gesture at a time to my user tester. My goals for this session were the same as before: Can she make this gesture? Is she able to tell what this gesture is for?

Instructional gesture drawings used in the app.

ONBOARDING CONCEPTS

Having heard good feedback on most gestures from user testers, classmates, and course instructors, I made some small adjustments before moving on to designing the app interface.

My initial challenge focused on onboarding the user and teaching them the gestural language. The primary issue that I grappled with was screen space – or lack thereof. I struggled to find the best way to provide the user with necessary instructions and feedback without taking up too much of the already small screen.

In version 1, I tried placing images, instruction, and semantic feedback in the center of the screen.

In version 2, I tried out different placements for the instructions and tried to incorporate echo feedback.

USER TESTING

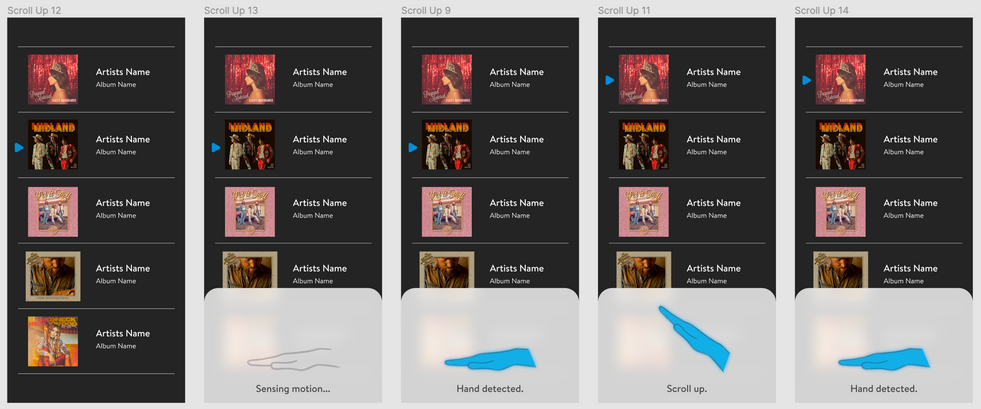

After creating a Figma prototype, I tested it with 3 user testers. There were concerns raised about the design of the gesture for volume control, and there were also issues understanding the echo feedback. However, the testers found the drawings themselves clear to interpret and understand. After making some modifications to one set of gestures, it was time to move on to the next step of the project: making wireframes of an existing music streaming app.

WIREFRAMING

Due to the amount of time the video editing process for this project would take, we were prompted to modify an existing interface rather than create a brand-new one. I chose to use Figma to modify Apple Music to allow for touch-free control.

My Apple Music wireframes in Figma.

UI DESIGN

At first, I tried to keep the modifications to the design as minimal as possible. My thinking was that since gesture control is such an unfamiliar concept to many people, learning a brand-new interface on top of learning how to operate with gestures instead of a touch screen might be too overwhelming for the average user.

However, it quickly became clear that this design did not lend itself to being read and used from a distance. The type was simply too small to make this app usable.

Screens from the first iteration of my UI design.

PIVOT

Hearing feedback from user testers, classmates, and my course instructor, I decided to go back to the drawing board. Usability issues aside, I even felt that there were some issues with the visual design of the app, despite the fact that I didn't make many changes.

1. Items on the screen are too small – album covers, text, and icons alike.

2. The blue color was used to differentiate Flick from the original, standard version of Apple Music, but it ultimately seems to make the connection to the brand weaker in the eyes of users.

To push the design forward, I resized and rearranged elements on the screen, increased text sizes, redesigned some icons, redrew and recolored my instructional hand gesture drawings, and shifted the color scheme back to the original one used by Apple in Apple Music.

Final Deliverable

The final UI design of the app.

ADOBE DIMENSION

At this point, I had a high-fidelity prototype of the app built in Figma. But how would I go about presenting it in a way that shows the gesture controls I designed? It would take a few components to put together a video demonstration.

The first step was to design a 3D background in Adobe Dimension. I sourced a 3D model of an iPhone online, and I placed that into the environment. I would later edit the screens I designed to appear on this screen.

Next, I found some free 3D assets online: a picnic table and a water bottle. I arranged them together, and added a stock photo of a campsite in the back. This represented the use case I had designed this app around – an outdoor gathering with music.

The background I designed in Adobe Dimension.

GREEN SCREEN

To show the product in use, I needed to create the illusion of hand gestures controlling the app. To do this, I used a green screening technique. I got a large sheet of green paper and adhered it to a wall. I then set up a camera to record my hand as I went through all of the gestures used with the app.

Once I had this, I was able to bring that footage into Adobe Premiere Pro and remove the green background.
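For readers curious about what removing a green background involves, here is a minimal sketch of the general idea behind chroma keying. It is not how Adobe Premiere Pro implements its keying effects; the function names, the `dominance` threshold, and the pixel values are all assumptions made for illustration. A pixel is treated as "green screen" when its green channel clearly exceeds both red and blue, and those pixels are made transparent so a background can show through.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]            # (red, green, blue), each 0-255
PixelRGBA = Tuple[int, int, int, int]   # adds alpha: 0 = transparent, 255 = opaque


def is_green_screen(pixel: Pixel, dominance: int = 40) -> bool:
    """Return True when green exceeds both red and blue by at least `dominance`.

    The default of 40 is an arbitrary illustrative threshold, not a standard value.
    """
    r, g, b = pixel
    return g - max(r, b) >= dominance


def remove_green(frame: List[List[Pixel]], dominance: int = 40) -> List[List[PixelRGBA]]:
    """Make green-screen pixels fully transparent and keep all others opaque."""
    return [
        [(r, g, b, 0 if is_green_screen((r, g, b), dominance) else 255) for (r, g, b) in row]
        for row in frame
    ]


def composite(foreground: List[List[PixelRGBA]], background: List[List[Pixel]]) -> List[List[Pixel]]:
    """Show the background wherever the foreground pixel is transparent."""
    return [
        [bg if fg[3] == 0 else fg[:3] for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


# A one-row "frame": a green paper pixel next to a skin-toned hand pixel (made-up values).
frame = [[(30, 200, 40), (210, 160, 140)]]
keyed = remove_green(frame)
scene = composite(keyed, [[(1, 2, 3), (4, 5, 6)]])
```

Real keying tools also soften edges and suppress green spill on the subject, which this hard threshold ignores.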

Screens from the video I recorded while doing hand gestures.

VIDEO EDITING

To put the final video together, I worked in Adobe Premiere Pro. I placed my rendering from Adobe Dimension onto the screen, and then overlaid the video of my hand with the green background removed. I took a screen recording of my prototype in use from Figma, and placed that over the phone screen in Premiere Pro.

It took some time, but I was able to go through and carefully trim and rearrange the video of my hand so that the timing of the gestures corresponded appropriately with the timing of the app.

Finally, I added music to create the illusion of the app being a 100% functional prototype. I edited the music to start playing, pause and unpause, and increase and decrease volume at the right moments.

Reflection

WHAT I LEARNED

This was my first real attempt at "Wizard of Oz" prototyping, and I found it both challenging and rewarding. Using only video and digital tools to simulate an in-person experience like this seemed like a big task, but I ended up very pleased with the result of the project.

A really important lesson I learned was simply the value of iteration and soliciting feedback. My designs came a long way from the initial version to the final version, and I wouldn't have achieved that growth if I hadn't been stopping to test with people at every opportunity.

At times, it felt tempting to accept a design that could be better just so I would be able to keep moving on to the next step of the project, but I am glad that I didn't let myself do that. I learned that I can't expect to "strike gold" right away with a design and that I won't magically get a stroke of genius; the only way to get a good design is to keep pushing it constantly.

This project was also an interesting insight into designing for a "new frontier" in tech. I grew up with touch screens, and most of the screen-based work I've done in the IxD program revolves around touch screens; it was a unique challenge to figure out how to introduce this kind of unfamiliar technology to users. It wasn't just about making an app that looks good and is easy to use, like so many other projects are; I had to find a way to teach the user and make them feel comfortable and confident doing something totally foreign to them. It was a fantastic exercise in empathy for a user and their struggles as I worked to minimize the burden on them.

NEXT STEPS

If I were to continue work on this project, I might go back and revise some of the design decisions I made. Some critique I received after submitting this assignment was that the music choice felt strange for the setting; likewise, some people also felt that the setting itself was unclear. With this in mind, I might consider going back and changing these elements, since the confusion that these caused for some viewers definitely detracted from the app and gestures, which ought to have been the focus of the video.